Apple recently announced the addition of a LiDAR (Light Detection and Ranging) scanner to the iPad Pro’s main camera unit.

The LiDAR scanner is being marketed to Apple’s user base as a “breakthrough” technology. LiDAR sensors, also referred to as 3D ‘Time of Flight’ (ToF) sensors, are already widely used in other fields, such as autonomous cars, for rapid and accurate depth sensing.
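The ‘time of flight’ in the name refers to the round trip of an emitted light pulse. The sensor measures how long the pulse takes to return, and because the light travels out and back, the distance to the target is half the round-trip path: d = c·Δt/2. The minimal Python sketch below shows that conversion. The function name and the example timing are illustrative only and are not taken from any Apple documentation.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Convert a measured round-trip time into a one-way distance.

    The pulse travels to the target and back, so the distance to the
    target is half of the total path length c * t.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # Illustrative timing: a return after about 33.4 nanoseconds,
    # which corresponds to a target roughly 5 metres away.
    t = 33.356e-9
    print(f"{tof_distance_m(t):.3f} m")
```

The example also shows why ToF sensing demands precise timing: a target a few metres away returns the pulse in a few tens of nanoseconds.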

Apple has built a large ecosystem of AR-capable devices since it launched ARKit for iOS in 2017. That AR capability comes from a feature called ‘world tracking’, which is based on a technique called visual-inertial odometry. ARKit uses the iPhone or iPad camera, combined with computer vision analysis, to detect and track notable features in a scene, such as flat surfaces or objects. The tracking data is then compared with the device’s motion sensor data to build a high-precision model of the device’s position and motion relative to the detected features. One limitation of the earlier implementation was that it could not generate 3D scan models of the environment without an external peripheral. With an integrated LiDAR scanner, Apple has now made ARKit capable of detecting the environment and generating 3D models of it.
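ARKit’s visual-inertial odometry is not public and is far more involved than anything shown here (it tracks many visual features in 3D). The core idea of blending fast but drifting motion-sensor estimates with drift-free camera estimates can nonetheless be illustrated with a one-dimensional complementary filter. This is a deliberately simplified stand-in, not Apple’s algorithm; the `Sample` type, the blending weight `alpha` and the sample data are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class Sample:
    accel: float                 # acceleration from the motion sensor (m/s^2)
    visual_pos: Optional[float]  # camera-based position fix (m); None if unavailable


def fuse_positions(samples: Iterable[Sample], dt: float, alpha: float = 0.9) -> List[float]:
    """Toy 1-D complementary filter (hypothetical; not ARKit's method).

    The inertial prediction (integrating acceleration) is smooth but drifts;
    the visual fix does not drift but may be noisy or missing. Each step
    predicts with the inertial data, then pulls the position estimate toward
    the visual fix with weight (1 - alpha).
    """
    pos, vel = 0.0, 0.0
    estimates = []
    for s in samples:
        # Predict: integrate acceleration into velocity, then velocity into position.
        vel += s.accel * dt
        pos += vel * dt
        # Correct: blend in the drift-free visual measurement when one exists.
        if s.visual_pos is not None:
            pos = alpha * pos + (1.0 - alpha) * s.visual_pos
        estimates.append(pos)
    return estimates


if __name__ == "__main__":
    # Stationary device with a camera fix at 1 m: the estimate converges to 1 m.
    fixes = [Sample(accel=0.0, visual_pos=1.0) for _ in range(50)]
    print(fuse_positions(fixes, dt=0.01)[-1])
```

When visual fixes are available the estimate is pulled toward them; when they drop out, the filter coasts on the inertial prediction alone, which is where drift accumulates.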

Apple’s launch of a device with an integrated LiDAR scanner has generated plenty of excitement. Apple may incorporate the LiDAR scanner in future iPhone releases, as well as in the oft-rumored AR/VR headset and Apple Glasses. That excitement may prompt other companies to introduce similar LiDAR features, possibly for environment recognition and imaging. It may also encourage alternative approaches to environment recognition, such as the use of radar. Finally, having these sensors and their capabilities in mobile devices may accelerate the development of augmented reality applications.

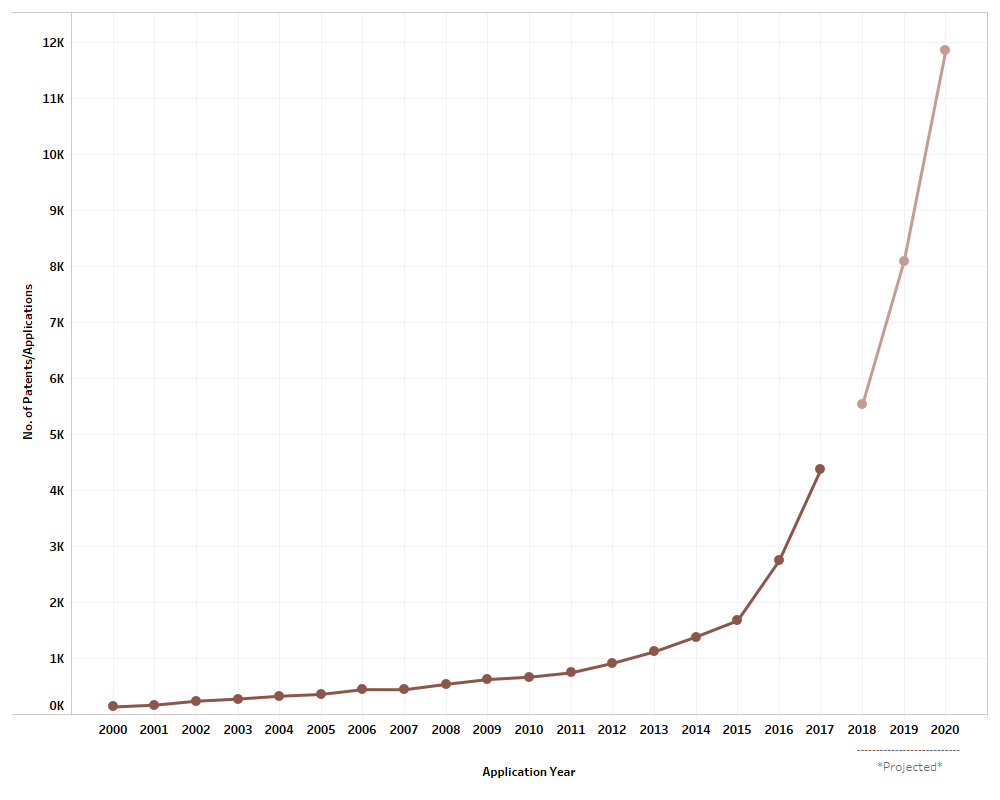

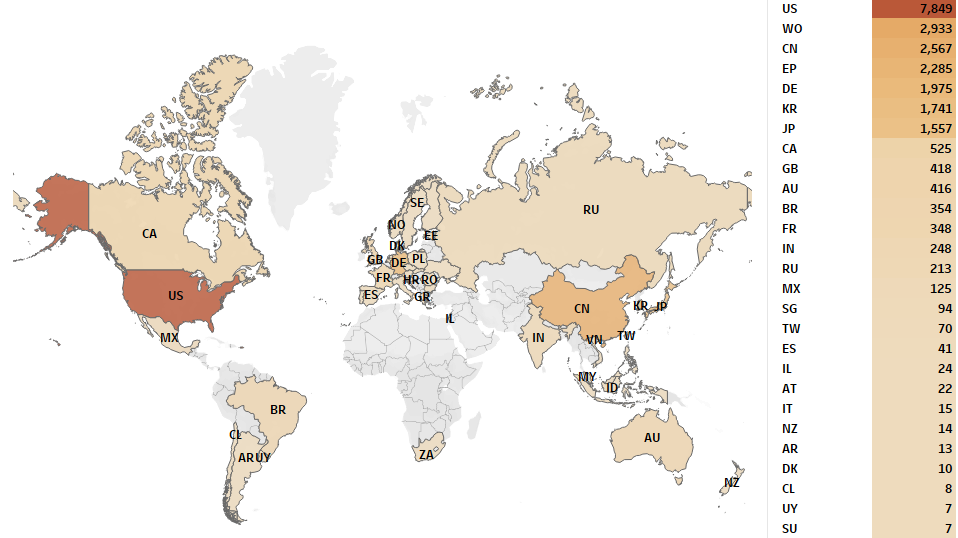

MaxVal recently searched patents and applications claiming LiDAR systems and methods to identify trends and get a glimpse of future activity by Apple and its competitors in the mobile device segment. As Fig. 1 shows, patent filing activity increased dramatically over the period 2015-2020. This trend suggests growing interest and research activity in LiDAR systems in the near future.

Fig. 1: Application trends in patent activity related to LiDARs.

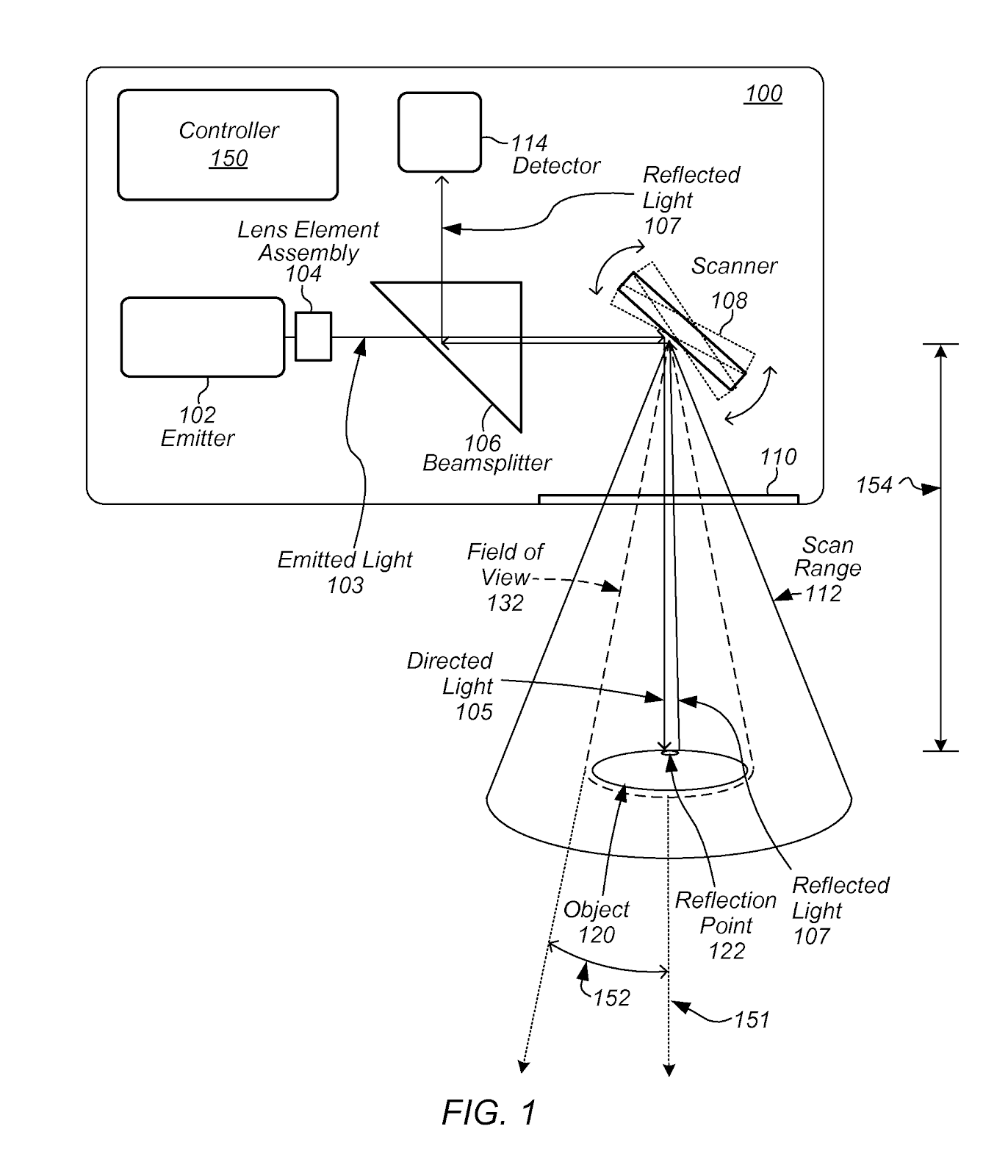

As shown in Fig. 2, the U.S. is the primary market for this technology, followed by China, Europe, South Korea and Japan. Notably, about three times as many applications were filed in the U.S. as in China.

Fig. 2: Market for patents related to LiDARs.


The assignees span many industries, such as automotive, aerospace, industrial equipment and consumer electronics. Apple’s 73 worldwide patents and applications claiming LiDAR scanner systems can be grouped into three broad categories: (i) the first group focuses on vehicle-related applications; (ii) the second group focuses on functional aspects of LiDAR that may apply both to vehicles and to low-powered devices like mobile phones; and (iii) the third group focuses on LiDAR sensors for mobile devices and/or on using LiDAR to enable novel functions in a mobile device. Selected cases from the third category are discussed below.

US Patent No. 10,107,914, titled “Actuated optical element for light beam scanning device”, relates to a lens assembly with multiple lenses in a LiDAR scanner. One or more of these lenses are used with a controlled actuator to adjust the divergence of the projected light beams. The patent discloses using a single sensor for two scanning modes. The first scans a wide area to detect objects, similar to the LiDAR sensor introduced in the new iPad Pro. The second uses a narrow-divergence scanning beam to generate detailed point clouds of the detected objects, similar to the True Depth Camera system used for FaceID.

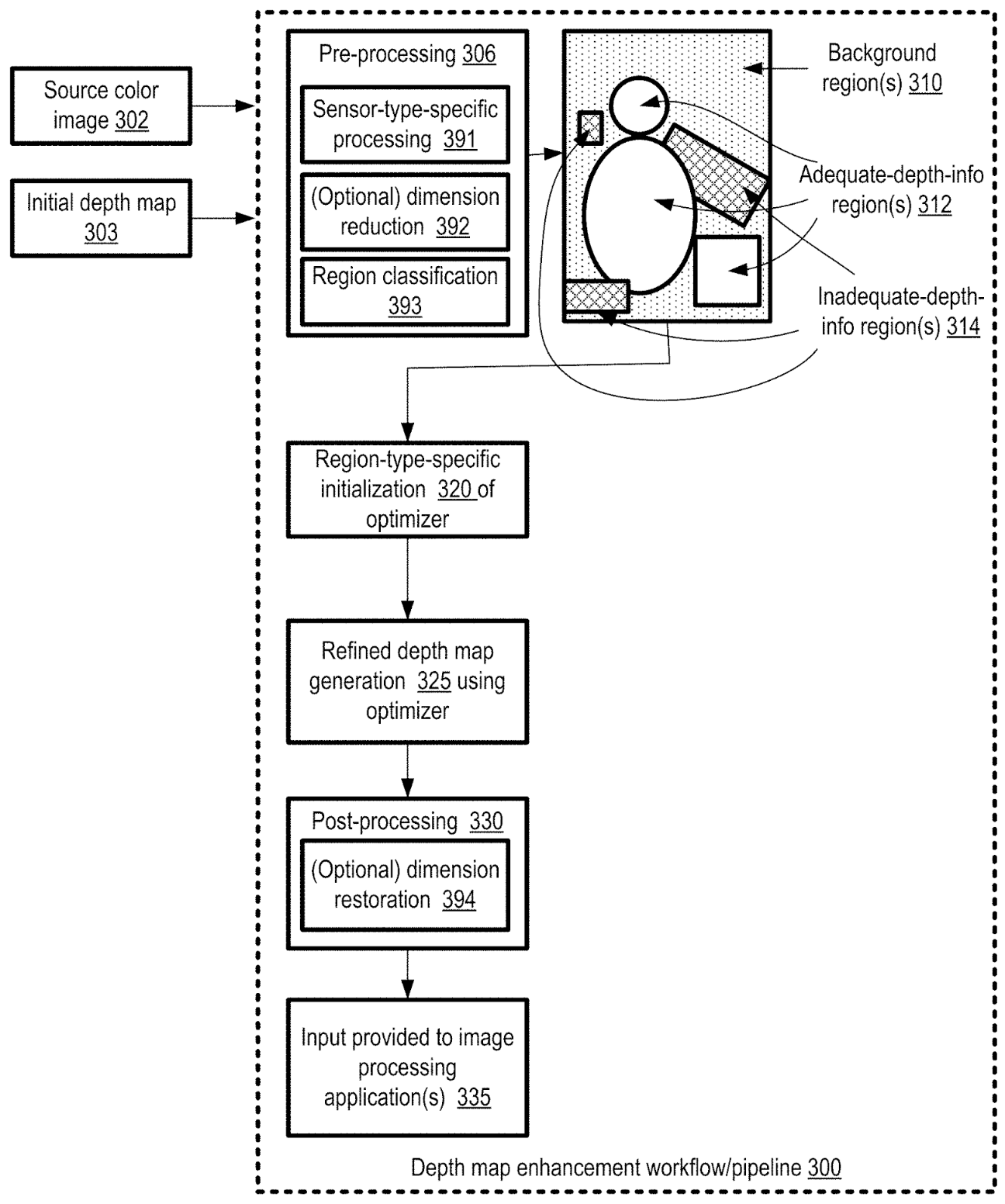
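The trade-off behind the ’914 patent’s two modes can be seen from simple beam geometry: a beam with full divergence angle θ widens by R·tan(θ/2) on each side at range R, so a wide beam covers more of the scene per pulse while a narrow beam concentrates on a small spot and resolves finer detail. The Python sketch below computes the approximate footprint. The divergence values and range are hypothetical and are not taken from the patent, and the model ignores diffraction and beam-profile effects.

```python
import math


def spot_diameter_m(range_m: float, full_divergence_deg: float, exit_diameter_m: float = 0.0) -> float:
    """Approximate beam footprint diameter at a given range.

    Simple geometric spreading: the beam widens by range * tan(theta / 2)
    on each side, where theta is the full divergence angle.
    """
    half_angle = math.radians(full_divergence_deg) / 2.0
    return exit_diameter_m + 2.0 * range_m * math.tan(half_angle)


if __name__ == "__main__":
    # Hypothetical settings: a wide "detection" beam vs. a narrow "detail" beam.
    for label, divergence in (("wide", 20.0), ("narrow", 0.5)):
        d = spot_diameter_m(range_m=3.0, full_divergence_deg=divergence)
        print(f"{label:>6}: {d * 100:.1f} cm footprint at 3 m")
```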

US Patent Publication No. 20190362511A1, titled “Efficient scene depth map enhancement for low power devices”, relates to enhancing depth maps generated using LiDAR and other sensors on a low-powered device, such as a mobile phone or a tablet. The disclosed method is allegedly more efficient than previous methods because it segments the initial depth map into specific regions, such as background, adequate-depth regions and inadequate-depth regions, and then performs further processing and analysis only on the inadequate regions, thereby minimizing the resources used by the device. An overview of the phases of a workflow that may be used for depth map enhancement, according to at least some embodiments of this publication, is shown below.

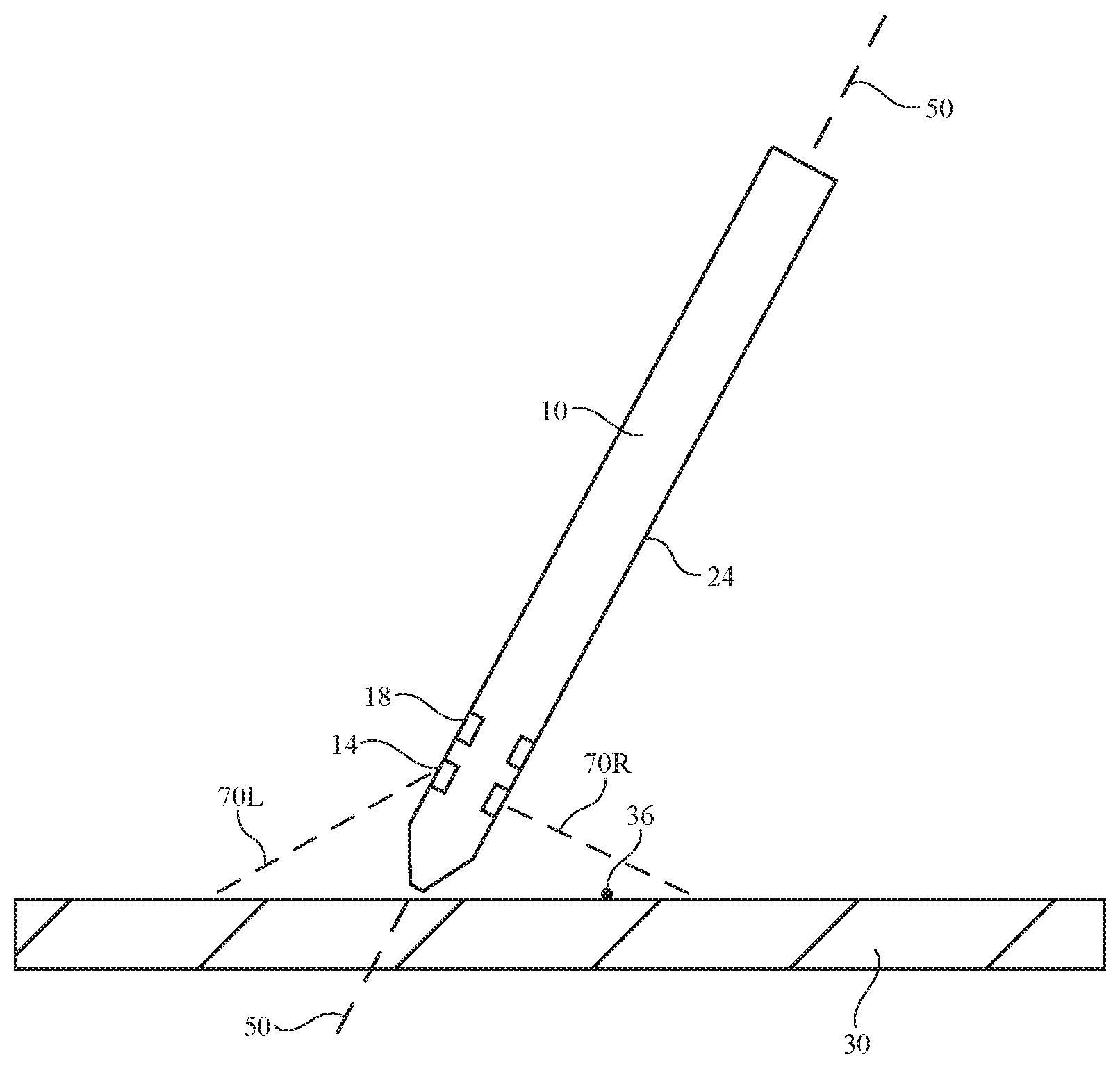

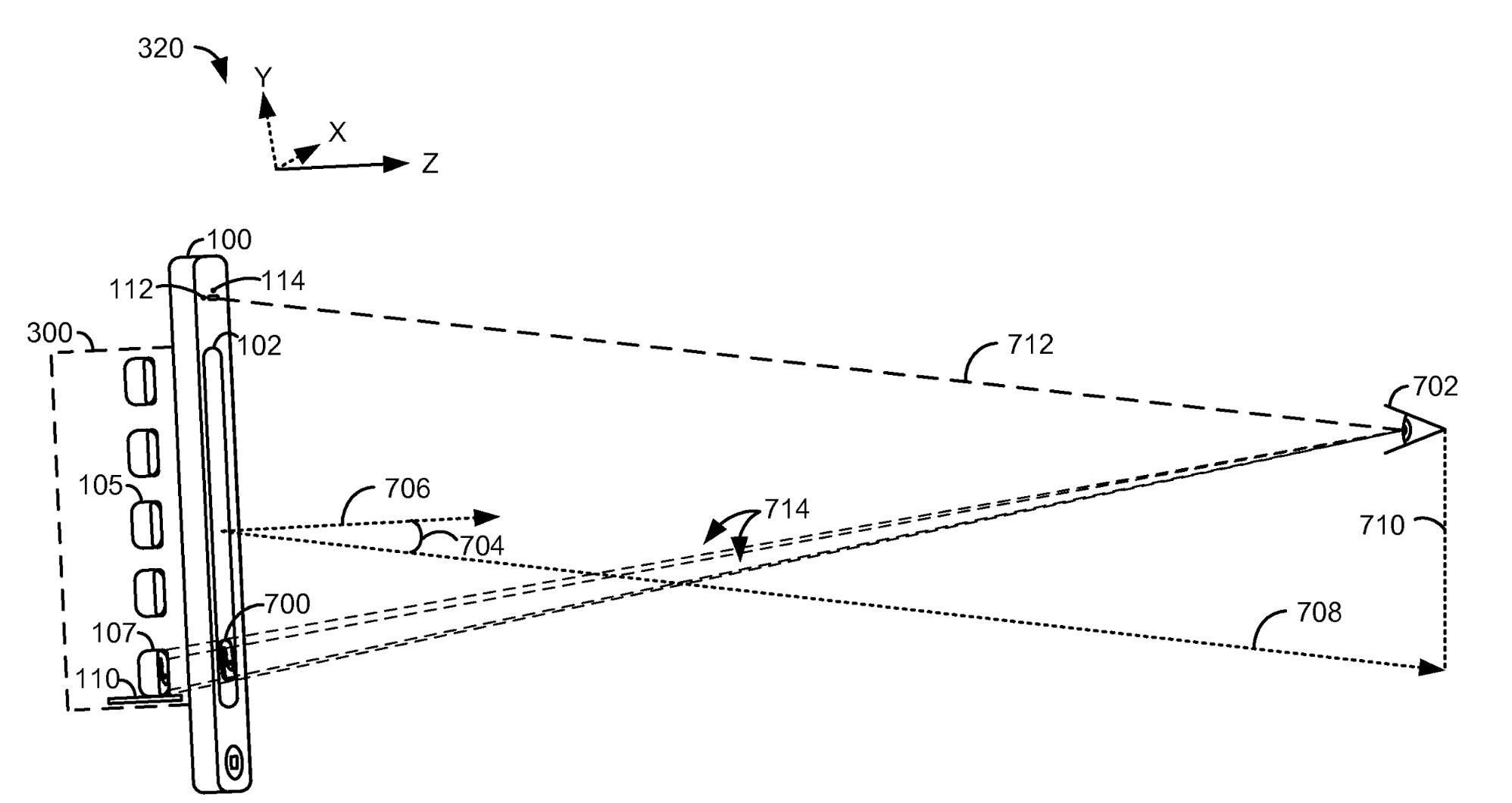
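The segment-then-refine idea described for the ’511 publication can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the publication’s actual method: the thresholds (`background_m`, `min_conf`), the per-pixel confidence map and the simple 4-neighbour averaging used as the “enhancement” step are all hypothetical choices.

```python
from typing import List, Optional

BACKGROUND, ADEQUATE, INADEQUATE = "background", "adequate", "inadequate"

Depth = List[List[Optional[float]]]  # metres; None means no valid return


def segment(depth: Depth, confidence: List[List[float]],
            background_m: float = 4.0, min_conf: float = 0.5) -> List[List[str]]:
    """Label each pixel using hypothetical thresholds."""
    labels = []
    for row_d, row_c in zip(depth, confidence):
        row = []
        for d, c in zip(row_d, row_c):
            if d is None or c < min_conf:
                row.append(INADEQUATE)   # missing or unreliable depth
            elif d > background_m:
                row.append(BACKGROUND)   # far away: not worth refining
            else:
                row.append(ADEQUATE)     # good enough as measured
        labels.append(row)
    return labels


def enhance(depth: Depth, labels: List[List[str]]) -> Depth:
    """Refine only the inadequate pixels; everything else is copied unchanged."""
    h, w = len(depth), len(depth[0])
    out = [row[:] for row in depth]
    for y in range(h):
        for x in range(w):
            if labels[y][x] != INADEQUATE:
                continue  # skip the expensive work on pixels that are already fine
            neighbours = [
                depth[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < h and 0 <= nx < w and labels[ny][nx] == ADEQUATE
            ]
            if neighbours:
                out[y][x] = sum(neighbours) / len(neighbours)
    return out


if __name__ == "__main__":
    depth = [[1.0, 1.0, 1.0], [1.0, None, 3.0], [1.0, 1.0, 9.0]]
    conf = [[1.0, 1.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
    print(enhance(depth, segment(depth, conf)))
```

The refinement work scales with the number of inadequate pixels rather than with the whole frame, which is the kind of saving the publication attributes to its segmentation step.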

US Patent No. 10,592,010, entitled “Electronic device system with input tracking and visual output”, relates to tracking user input on an electronic device using various sensors, which may include a LiDAR unit. The patent discloses the ability to track a user input using LiDAR. Many current Apple devices (e.g., recent iPhone and iPad Pro models) are equipped with a True Depth Camera system for FaceID. The working principle and components of the True Depth Camera system are broadly similar to those of the newly introduced LiDAR system, but with more densely packed IR projections. These devices may therefore be ready to implement user input tracking as claimed in the ’010 patent. A representative image with a device packed with the sensor is shown below.

US Patent No. 9,411,413, titled “Three dimensional user interface effects on a display”, relates to a display that dynamically generates a three-dimensional user interface based on the detected position of the user’s head. The patent discloses using a LiDAR sensor to detect the position of the user’s head and combining this data with data from other sensors that detect the motion and relative position of the device with respect to the user’s head. The patent proposes combining the head position data with the motion data to generate a more realistic virtual 3D depiction of the objects on the device’s display.
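The effect described for the ’413 patent rests on head-coupled perspective: once the head position is known, a virtual object “behind” the glass is drawn where the line from the viewer’s eye to that object crosses the display plane, so it shifts on screen as the head moves, much like looking through a window. The Python sketch below shows that geometry. It is a generic illustration, not the patent’s rendering method; the coordinate convention (metres from the display centre, viewer at positive z) and the `Vec3` type are assumptions.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Vec3:
    x: float
    y: float
    z: float


def project_to_screen(point: Vec3, eye: Vec3) -> Tuple[float, float]:
    """Project a virtual point onto the display plane (z = 0) as seen from `eye`.

    Coordinates are in metres relative to the display centre: the viewer's
    eye is in front of the screen (eye.z > 0), and virtual objects "behind"
    the glass have point.z < 0. The on-screen position is where the line
    from the eye to the point crosses z = 0.
    """
    if eye.z <= 0 or point.z >= eye.z:
        raise ValueError("eye must be in front of the screen and of the point")
    t = eye.z / (eye.z - point.z)  # parameter along the eye-to-point line where z = 0
    return (eye.x + t * (point.x - eye.x), eye.y + t * (point.y - eye.y))


if __name__ == "__main__":
    target = Vec3(0.0, 0.0, -0.10)  # hypothetical object 10 cm behind the glass
    for head_x in (0.0, 0.10):      # head centred, then moved 10 cm to the right
        print(head_x, project_to_screen(target, Vec3(head_x, 0.0, 0.30)))
```

With these hypothetical distances (viewer 30 cm from the screen), moving the head 10 cm to the right shifts the drawn object 2.5 cm to the right, which is the parallax cue that makes the interface appear three-dimensional.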

As the example cases above show, Apple’s publications relating to LiDAR sensors go beyond environment recognition for AR/VR applications. The technology could be adapted to let users interact with the device, in addition to providing an improved visual experience. Apple’s capabilities from ARKit and FaceID, together with its foray into LiDAR scanners in mobile devices, give it a competitive advantage. However, other companies such as Google may not be far behind. For example, Project Soli from Google’s Advanced Technology and Projects (ATAP) group uses a radar chip instead of LiDAR to achieve similar objectives.

Contact us to request a copy of our landscape report on LiDAR systems or to learn more about our search services.
